Chaine's Architecture

At Chaine, we use a combination of Domain-Driven Design, CQRS, and Event Sourcing.

Our architecture is entirely event-based: services and subsystems react to events. For example, when a user is created, the user subsystem may emit an event called 'userCreated'. Any other subsystem that needs to know when a user is created (e.g. the chat subsystem) listens for 'userCreated' and reacts by taking the appropriate action, such as creating a user in the chat subsystem for real-time chat.
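The emit-and-react idea can be sketched with a tiny in-memory bus. This is only an illustration: the real system routes events through AWS EventBridge, and every name below (`DomainEvent`, `InMemoryBus`, `chatUsers`, the `userId` payload field) is hypothetical.

```typescript
// Hypothetical in-memory stand-in for an event bus; not the production implementation.
type DomainEvent = { type: string; payload: Record<string, unknown> };
type EventHandler = (evt: DomainEvent) => void;

class InMemoryBus {
  private handlers: Record<string, EventHandler[]> = {};

  // A subsystem registers interest in an event type.
  subscribe(type: string, handler: EventHandler): void {
    const list = this.handlers[type] || [];
    list.push(handler);
    this.handlers[type] = list;
  }

  // The emitting subsystem does not know who is listening.
  publish(evt: DomainEvent): void {
    for (const handler of this.handlers[evt.type] || []) {
      handler(evt);
    }
  }
}

const bus = new InMemoryBus();
const chatUsers: string[] = [];

// Chat subsystem: reacts to 'userCreated' by creating its own chat user.
bus.subscribe("userCreated", (evt) => {
  chatUsers.push(String(evt.payload["userId"]));
});

// User subsystem: emits the event after creating a user.
bus.publish({ type: "userCreated", payload: { userId: "u-1" } });
```

The user subsystem never calls the chat subsystem directly; the only coupling between them is the event name and payload.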

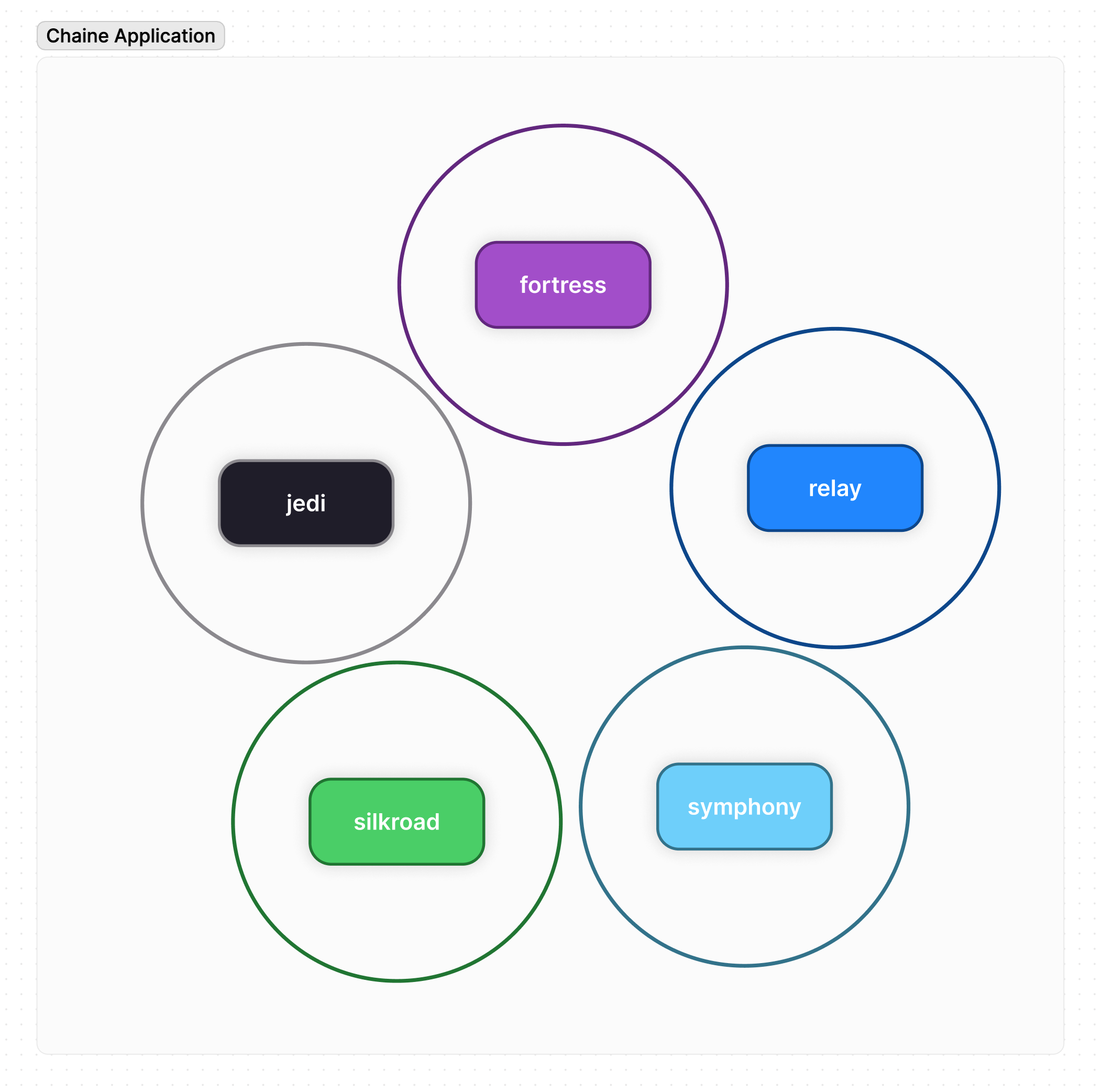

High-level design

Chaine consists of multiple autonomous services or "subsystems". You can think of each subsystem almost as its own stand-alone 'app' and it has responsibility for a specific 'domain'. Here are our current subsystem names:

Example of different subsystems

The responsibilities of each domain are:

- Fortress: Responsible for all things related to authentication, authorization, user management, user profiles, workspace management, etc. Because this is a very large domain, we have broken it down into multiple services:

  - (bff)-user-workspace-management: responsible for all things related to users and workspaces. This includes user profiles, user management, workspace management, etc.

  - (bff)-motor-carrier-management: responsible for all things related to motor carriers and how they relate to a Chaine workspace. Workspace verification is done here.

  - (bff)-billing: responsible for all things related to platform usage as it pertains to billing. In the future, we will include subscription management, payment management, etc. here.

- Jedi: Responsible for all things related to shipment management and shipment tracking. This does not include posts (a post is an available load for booking), which are handled in Silkroad.

  - (bff)-shipment-management: responsible for all things related to shipment management including creating a shipment, updating a shipment, etc. This service is split into two parts:

    - Command: The APIs that modify the shipment: create shipment, update shipment details/status/mode, add/remove/update stops, etc.

    - Query: Responsible for all things related to querying the shipment and displaying it on the UI. The Query section listens in on all events that are generated from the command (shipmentCreated, shipmentUpdated, etc.) and saves only the details needed to show the shipment on the UI.

  - (bff)-tracking: responsible for all things related to shipment tracking that is shown on the UI. This service listens in on events from jedi-esg-track-shipment and saves only the details needed to show the shipment on the UI. The shipment is deleted from this service after a specific time (TTL).

  - (esg)-track-shipment: Back-end service which handles tracking data coming from the Chaine mobile app and from third-party devices such as ELDs and tracking devices such as Tive. This service creates a shallow copy of the shipment (by listening in on the shipmentCreated event) and also updates/removes tracking sources (i.e. it listens in on the driverAssigned, driverUnassigned, truckTrailerAdded, truckTrailRemoved events).

    - This service is responsible for determining when a shipment should start and stop tracking. See our When does tracking start and stop? docs.

    - Mobile app events: The Chaine mobile app emits various events, including location and other user events. This service listens in on those events, determines whether a user is on a shipment, and if so, emits the relevant events for other services to listen in on (particularly the bff-tracking service, which is responsible for showing tracking data on the UI).

  - (esg)-track-container: Back-end service that has the logic to get tracking data from the various steamship lines. This service manages which containers need tracking, manages the polling of the steamship lines, and manages the parsing of the tracking data. It also normalizes the container events to a standard Chaine format and handles duplicate events if they are received from the steamship lines.

- Relay: Responsible for all things related to real-time chat. Relay manages and syncs user creation and user profile updates with the chat subsystem. It also listens in on the shipmentCreated and loadPostOfferMade events to create a chat room for the shipment or load post offer.

  - Publishing chat events: Relay also publishes chat events for other subsystems to listen in on. For example, if a new chat message is received, it emits a chatMessageReceived event that other systems like our notification subsystem (Tachyon) can listen in on and decide whether a notification needs to be sent.
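As an illustration of the Command/Query split described for shipment management, the Query side can be thought of as a projection that folds events into a UI-friendly view. The sketch below assumes hypothetical payload fields (`shipmentId`, `status`) and status values; the real event shapes are not shown in this document.

```typescript
// Hypothetical event and read-model shapes, for illustration only.
type ShipmentEvent = {
  type: "shipmentCreated" | "shipmentUpdated";
  shipmentId: string;
  status: string;
};
type ShipmentView = { shipmentId: string; status: string };
type ShipmentViews = Record<string, ShipmentView>;

// Fold one event into the read model, keeping only what the UI needs.
function projectShipment(views: ShipmentViews, evt: ShipmentEvent): ShipmentViews {
  if (evt.type === "shipmentCreated") {
    return { ...views, [evt.shipmentId]: { shipmentId: evt.shipmentId, status: evt.status } };
  }
  const current = views[evt.shipmentId];
  if (!current) return views; // ignore updates for shipments we never saw created
  return { ...views, [evt.shipmentId]: { ...current, status: evt.status } };
}

const shipmentEvents: ShipmentEvent[] = [
  { type: "shipmentCreated", shipmentId: "s-1", status: "booked" },
  { type: "shipmentUpdated", shipmentId: "s-1", status: "inTransit" },
  { type: "shipmentUpdated", shipmentId: "s-404", status: "delivered" },
];

let shipmentViews: ShipmentViews = {};
for (const evt of shipmentEvents) {
  shipmentViews = projectShipment(shipmentViews, evt);
}
```

The Command side never writes to this view directly; it only emits events, and the view is rebuilt from them.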

Each autonomous service is highly protected from the implementation details of other subsystems, and no autonomous service accesses data directly from another service. This allows for a highly decoupled system. When data is needed from another subsystem, it is obtained by reacting to events.

Each subsystem will emit specific events, and other subsystems will listen and react to events as they see fit.

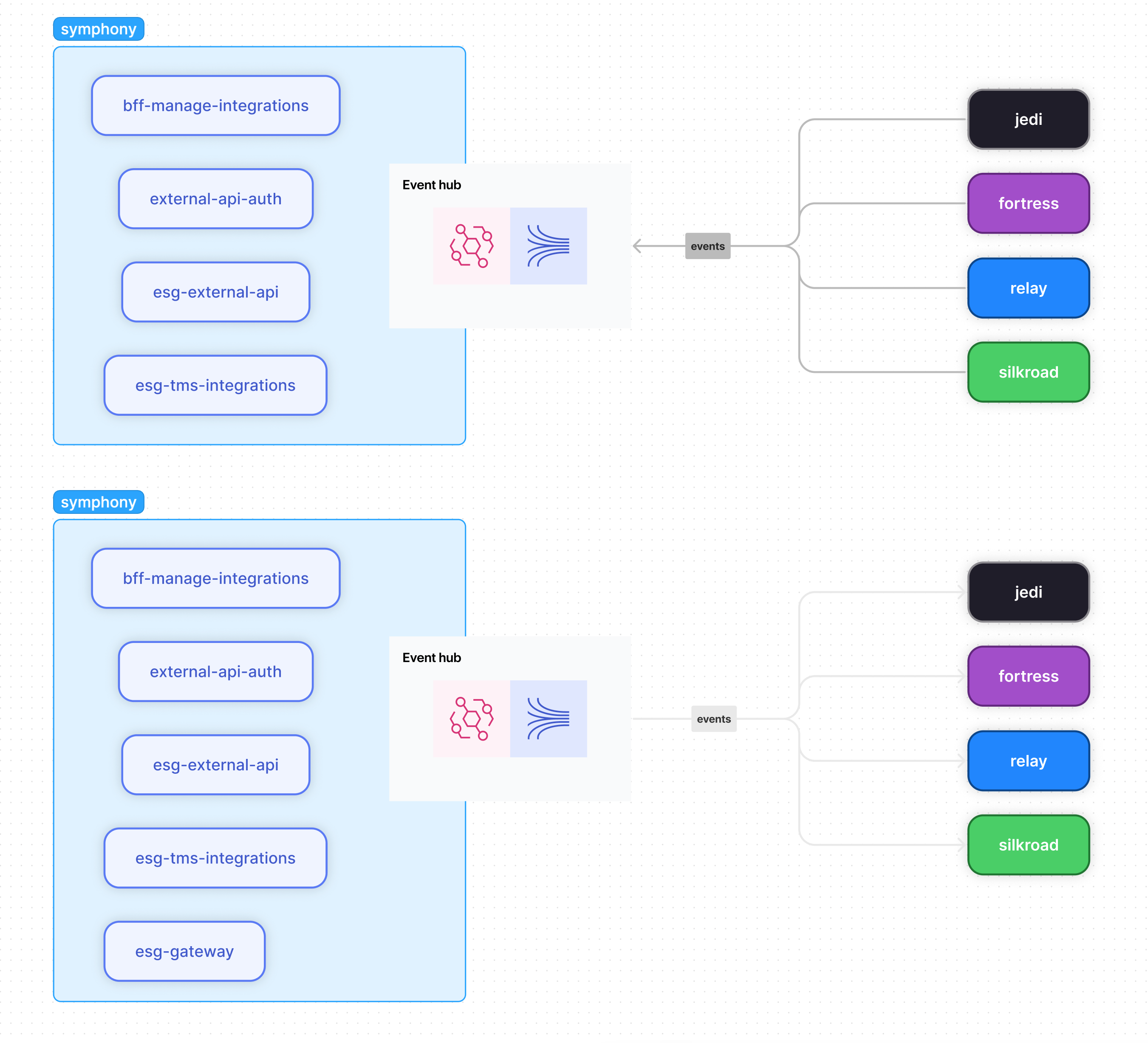

Events to and from a subsystem flow through AWS EventBridge

For more details on the flow of events, see the next section.

Packages and flow of events

Each subsystem will have a general package structure set up like this:

    |__subsystemA
       |__bff-1
       |__bff-2
       |__esg-gateway
       |__esg-1
       |__hub
       |__events

ESGs and BFFs are explained in detail in the autonomous services section.

- A subsystem may have multiple BFFs and ESGs.

- A subsystem will have only one esg-gateway, hub, and events.

esg-gateway: This package has only 2 responsibilities. 1. Take external events and convert them to an "internal" format for services inside of a subsystem to consume. 2. Take any internal events generated from any of the bffs or esgs, and convert them to an "external" format for other subsystems to use.

hub: This package has the EventBridge and Kinesis Stream implementation along with the EventBridge Rules which control the flow of events.

- Deploys the event bus using AWS EventBridge: Responsible for taking in all events either from any internal service or from other subsystems' EventBridge event buses.

- Deploys the subsystem's Kinesis Stream: The kinesis stream is responsible for high-throughput handling of all events (ones that come from external subsystems via the subsystem's event bus or events that are published from internal services to be used for internal services). See the flow of events section for more details.

- Configure event bus Rules: Rules are used to tell the event bus where to send each event. This is done by filtering based on a few fields in the event.
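To make the idea of filtering on a few fields concrete, here is a simplified model of an EventBridge-style event pattern, where each listed field must equal one of the allowed values. The `source` and `detail-type` fields are part of the standard EventBridge event envelope; the specific values (`relay.internal`, the rule name) and the matcher itself are assumptions for illustration. Real EventBridge patterns support more operators than this sketch.

```typescript
// Simplified model of a bus event and a rule pattern; not the AWS SDK types.
type BusEvent = {
  source: string;
  "detail-type": string;
  detail: Record<string, unknown>;
};
type RulePattern = { source?: string[]; "detail-type"?: string[] };

// A field that is absent from the pattern matches anything.
function matchesRule(pattern: RulePattern, evt: BusEvent): boolean {
  const allowedSources = pattern.source;
  const allowedTypes = pattern["detail-type"];
  const sourceOk = !allowedSources || allowedSources.indexOf(evt.source) !== -1;
  const typeOk = !allowedTypes || allowedTypes.indexOf(evt["detail-type"]) !== -1;
  return sourceOk && typeOk;
}

// Hypothetical rule: send Relay's internal chat events to Relay's Kinesis stream.
const internalToStreamRule: RulePattern = {
  source: ["relay.internal"],
  "detail-type": ["chatMessageReceived"],
};

const sampleBusEvent: BusEvent = {
  source: "relay.internal",
  "detail-type": "chatMessageReceived",
  detail: { roomId: "r-1" },
};

console.log(matchesRule(internalToStreamRule, sampleBusEvent));
```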

events: This is a types package which contains all the event types for any internal event that is generated and any internal event converted to an external format.

- This package is used by internal packages when a service in the subsystem is consuming events from another service in the subsystem.

- This package is also used by external subsystems, usually in the external subsystem's esg-gateway, when they are consuming an event from this subsystem and converting them to an internal format for their own subsystem.

Flow of events

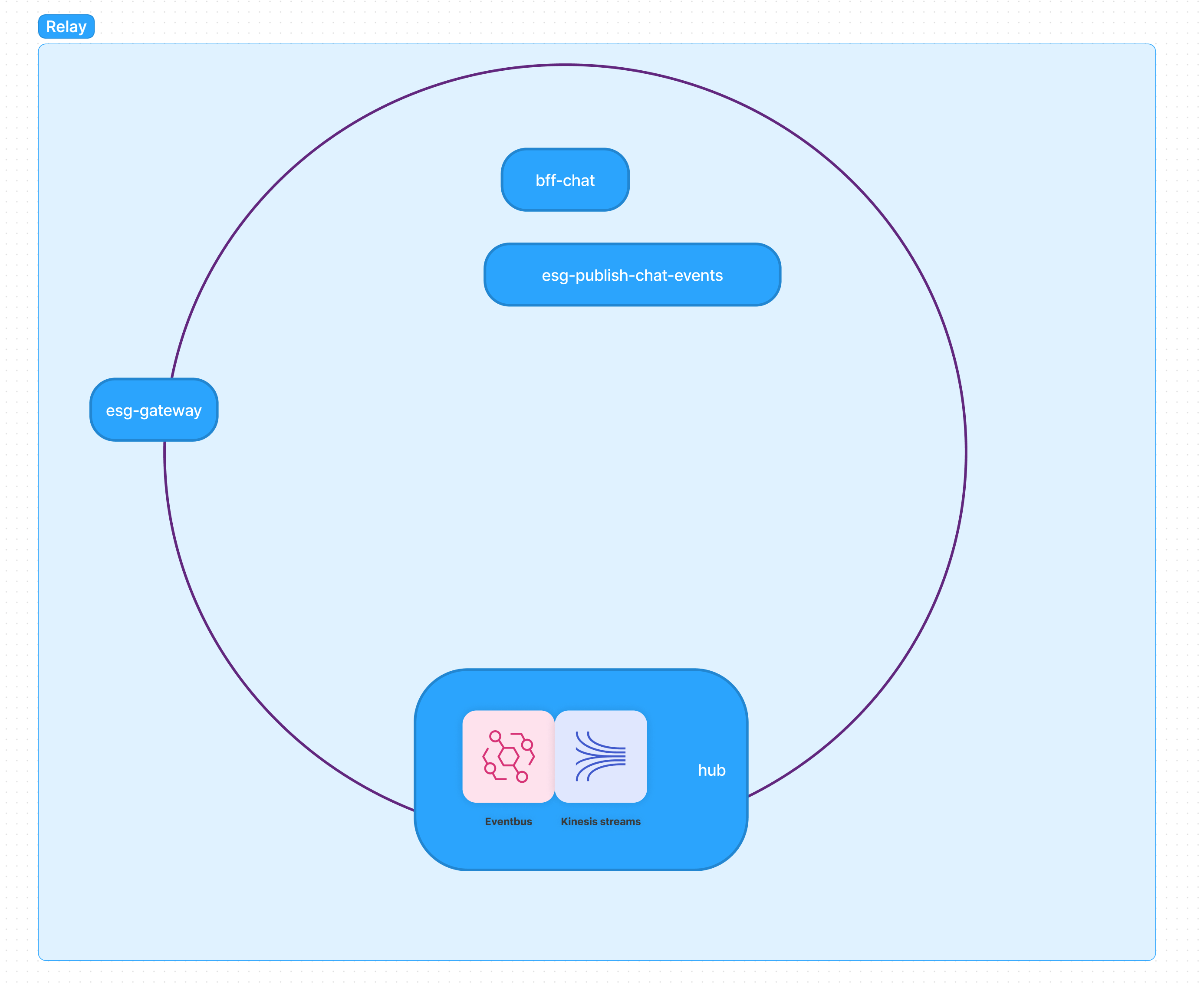

Let's look at an example repo, "relay", which has this basic structure:

Relay packages

Subsystem Relay and its packages and services

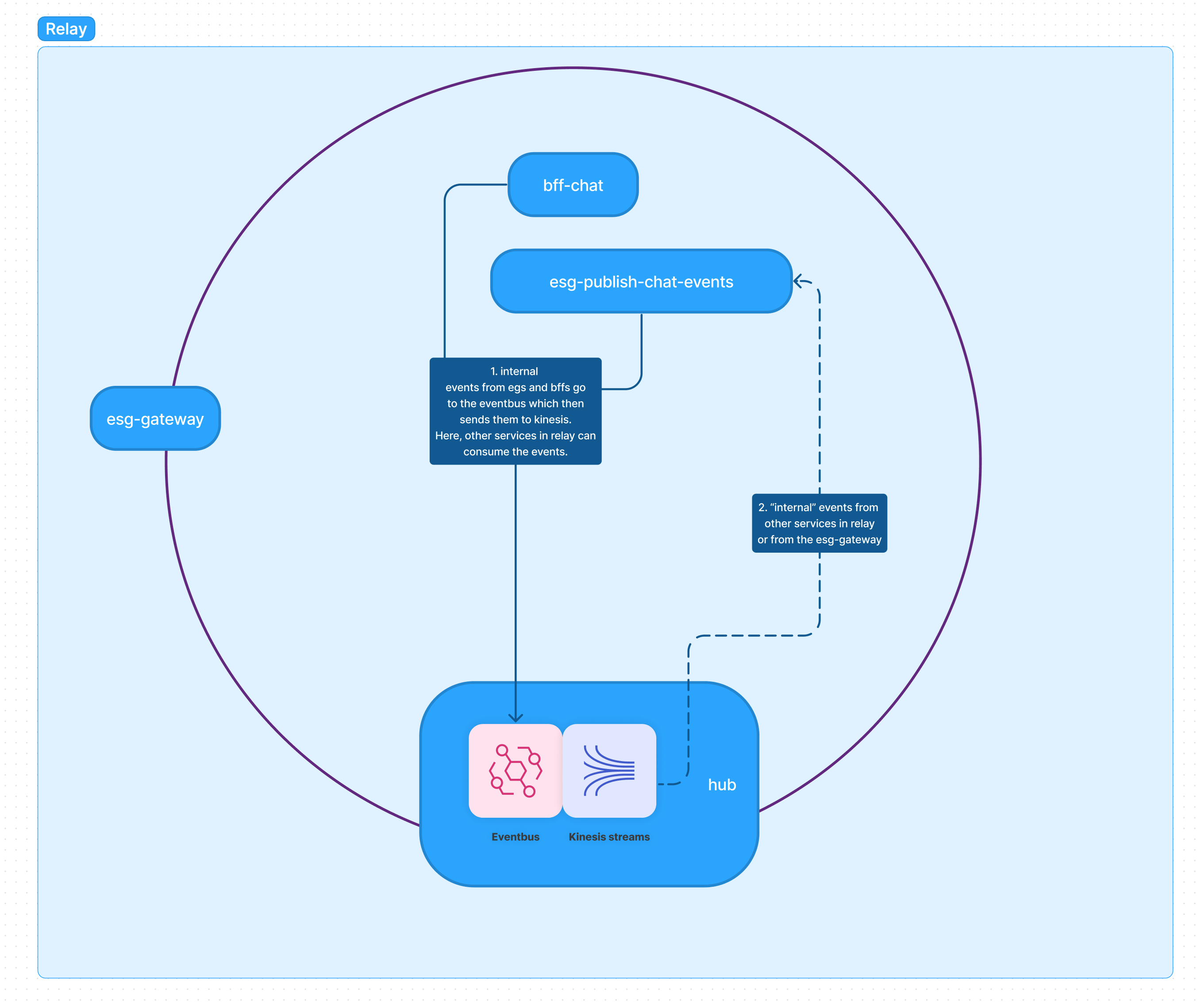

Domain events generated inside Relay for use in Relay

Services inside of Relay can emit "internal" domain events for use in other services in Relay. These are labelled "internal" because, at this point, they have not been published to external subsystems. Each BFF or ESG can publish events to the event bus. A rule configured in the event bus then publishes all "internal" domain events to the subsystem's Kinesis stream. Once the "internal" domain events are in Kinesis, any service inside the subsystem can listen on the Kinesis stream and consume the events it wants.

Internal events published by Relay's services, and used by other services in Relay.

The flow is:

- Internal events from esgs and bffs go to the eventbus which then sends them to relay's kinesis stream via a Rule.

- These events are now emitted by Kinesis, and any service in Relay can set up an ingress-listener. This listener is just a Lambda that is configured to be invoked by events from this Kinesis stream. In the Lambda function, we use the pipes and filters design pattern to keep only the events the service wants.
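The pipes and filters idea inside an ingress-listener might look roughly like the sketch below. It assumes the batch has already been decoded into plain objects (in a real Lambda, Kinesis records arrive base64-encoded and would need decoding first); the type names, stage helpers, and the `roomId` field are hypothetical.

```typescript
// Hypothetical decoded event shape and stage type.
type StreamEvent = { type: string; detail: Record<string, unknown> };
type Stage = (batch: StreamEvent[]) => StreamEvent[];

// Filter: keep only the event types this service cares about.
function keepTypes(types: string[]): Stage {
  return (batch) => batch.filter((e) => types.indexOf(e.type) !== -1);
}

// Filter: drop events missing a field the handler needs.
function keepWithField(field: string): Stage {
  return (batch) => batch.filter((e) => e.detail[field] !== undefined);
}

// Pipe: run stages left to right, feeding each stage's output to the next.
function pipeStages(...stages: Stage[]): Stage {
  return (batch) => stages.reduce((acc, stage) => stage(acc), batch);
}

const selectChatMessages = pipeStages(
  keepTypes(["chatMessageReceived"]),
  keepWithField("roomId")
);

// Sketch of a listener body: filter the batch, then handle what is left.
function ingressListener(batch: StreamEvent[], handle: (e: StreamEvent) => void): void {
  selectChatMessages(batch).forEach(handle);
}
```

Because every service reads the same stream, each listener applies its own filters rather than relying on the stream to deliver only relevant events.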

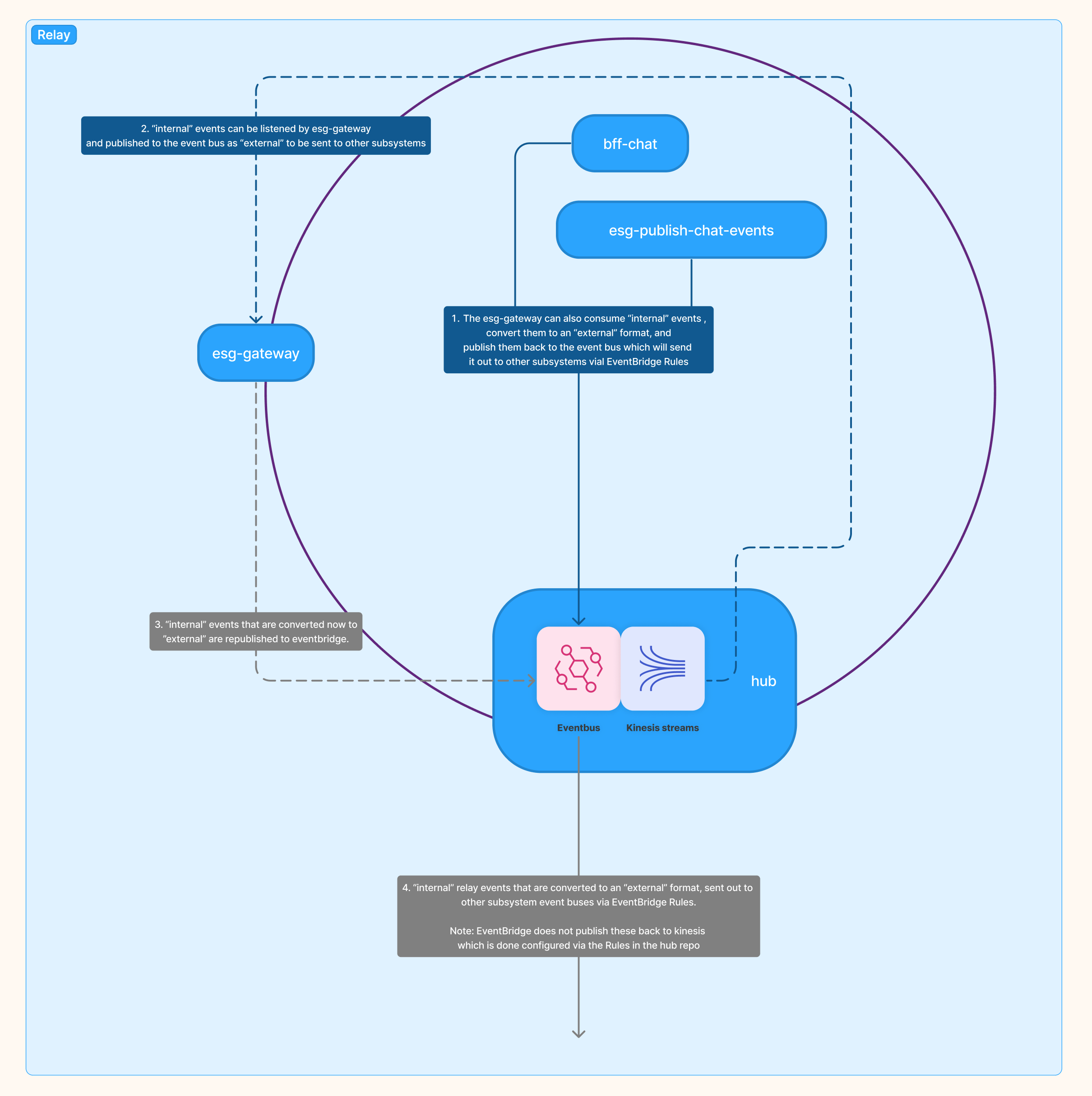

Domain events generated inside Relay for use in other Subsystems

Services inside of relay can emit "internal" domain events that can be used by other subsystems.


The flow is:

- Internal events from esgs and bffs go to the eventbus which then sends them to relay's kinesis stream via a Rule.

- Relay's esg-gateway then consumes these "internal" domain events and converts them to an "external" format. We do this so the external format has strong backwards compatibility, since other subsystems will be using it.

- esg-gateway publishes the "internal" domain events, which are now converted to an "external" format, to Relay's event bus.

- A Rule inside of Relay's event bus (configured in the hub package) then publishes external domain events to other subsystems that want them.
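The internal-to-external conversion in esg-gateway can be pictured as a small mapping function. The sketch below is an assumption about how such a mapping might look: the field names, the `relay.` prefix, and the explicit `version` field are hypothetical choices that illustrate how an external contract can stay stable while the internal shape changes.

```typescript
// Hypothetical internal shape: free to change as Relay evolves.
type InternalChatMessageReceived = {
  type: "chatMessageReceived";
  roomId: string;
  senderId: string;
  text: string;
  sentAtMs: number;
};

// Hypothetical external shape: versioned and kept backwards compatible.
type ExternalChatMessageReceivedV1 = {
  type: "relay.chatMessageReceived";
  version: 1;
  roomId: string;
  senderId: string;
  sentAt: string; // ISO 8601 timestamp
};

// Expose only the fields other subsystems need, in a stable format.
function toExternalChatMessage(evt: InternalChatMessageReceived): ExternalChatMessageReceivedV1 {
  return {
    type: "relay.chatMessageReceived",
    version: 1,
    roomId: evt.roomId,
    senderId: evt.senderId,
    sentAt: new Date(evt.sentAtMs).toISOString(),
  };
}

const externalChatMessage = toExternalChatMessage({
  type: "chatMessageReceived",
  roomId: "r-1",
  senderId: "u-1",
  text: "hello",
  sentAtMs: 0,
});
```

Here the message text is deliberately left out of the external event, as an example of an internal detail that other subsystems may not need.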

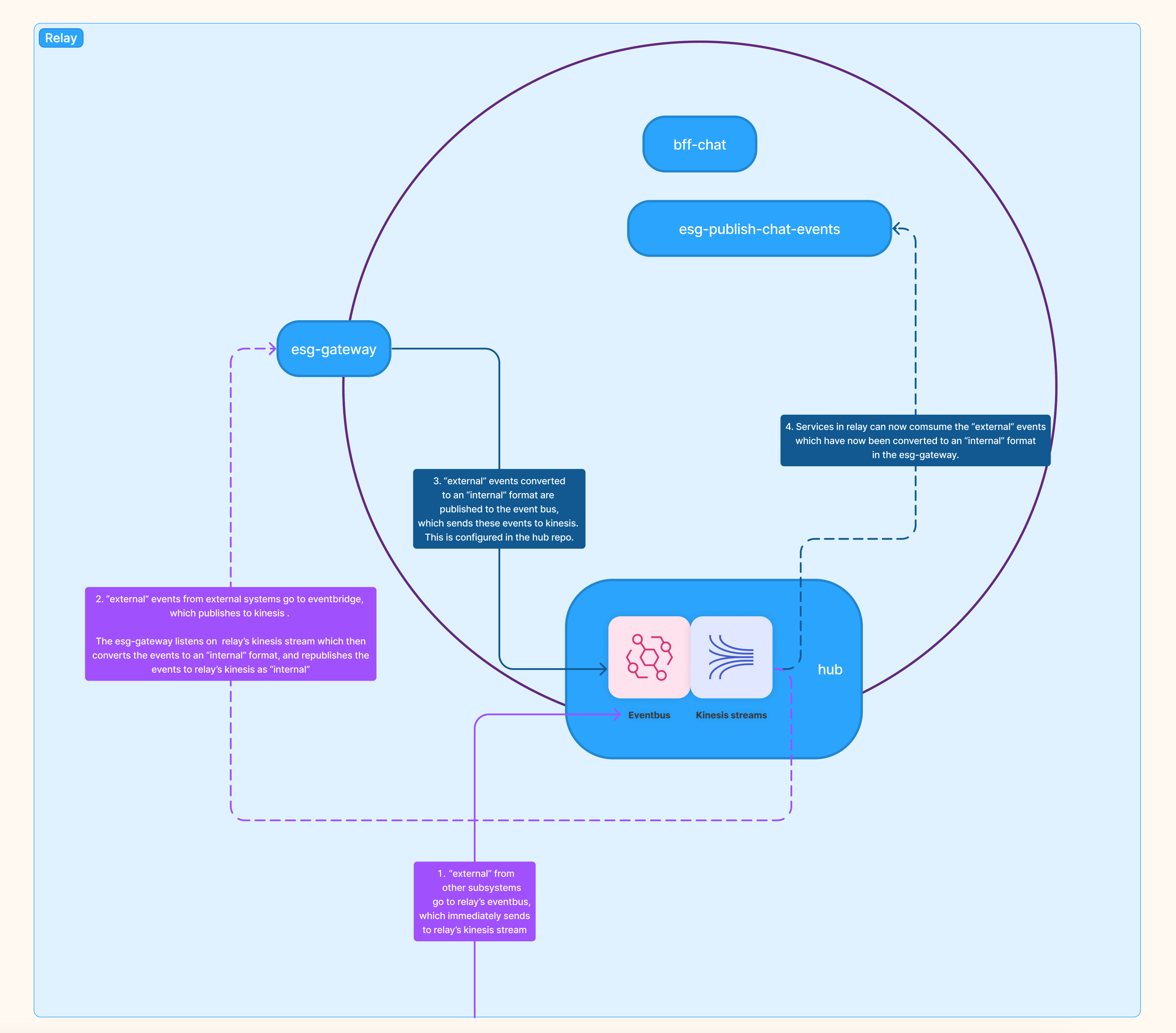

Domain events generated in other subsystem for use in Relay

Events that other subsystems emit can also be sent from an external subsystem's event bus to Relay's eventbus via a Rule (configured in the hub package).


The flow is:

- External events from other subsystems go to Relay's event bus (via a Rule set up in that external subsystem's hub package). A Rule configured in Relay's hub then immediately publishes these events to Relay's Kinesis stream.

- Relay's esg-gateway can listen in on these events inside of its ingress-listener. esg-gateway's ingress-listener converts these "external" events to an "internal" format.

- esg-gateway publishes the new events, which are now in an "internal" format, back to Relay's event bus. A Rule inside of Relay's event bus (configured in the hub package) immediately sends these events to Relay's Kinesis stream.

- Services in Relay can now consume the "external" events, which have been converted to an "internal" format in the esg-gateway.
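The reverse direction can be sketched the same way: esg-gateway takes another subsystem's "external" event and maps it to Relay's own "internal" format. The shapes below, including the `jedi.` prefix, the `version` field, and `workspaceId`, are assumptions for illustration only.

```typescript
// Hypothetical external event as published by another subsystem (here, Jedi).
type ExternalShipmentCreated = {
  type: "jedi.shipmentCreated";
  version: number;
  shipmentId: string;
  workspaceId: string;
};

// Hypothetical internal event that Relay's own services consume.
type RelayShipmentCreated = {
  type: "shipmentCreated";
  shipmentId: string;
  workspaceId: string;
};

// Convert a known version; return null for versions this gateway does not understand.
function toInternalShipmentCreated(evt: ExternalShipmentCreated): RelayShipmentCreated | null {
  if (evt.version !== 1) return null;
  return {
    type: "shipmentCreated",
    shipmentId: evt.shipmentId,
    workspaceId: evt.workspaceId,
  };
}

const relayShipmentCreated = toInternalShipmentCreated({
  type: "jedi.shipmentCreated",
  version: 1,
  shipmentId: "s-1",
  workspaceId: "w-1",
});
```

Keeping this mapping in one place means the other services in Relay only ever see Relay's internal format and are unaffected if the external format gains a new version.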

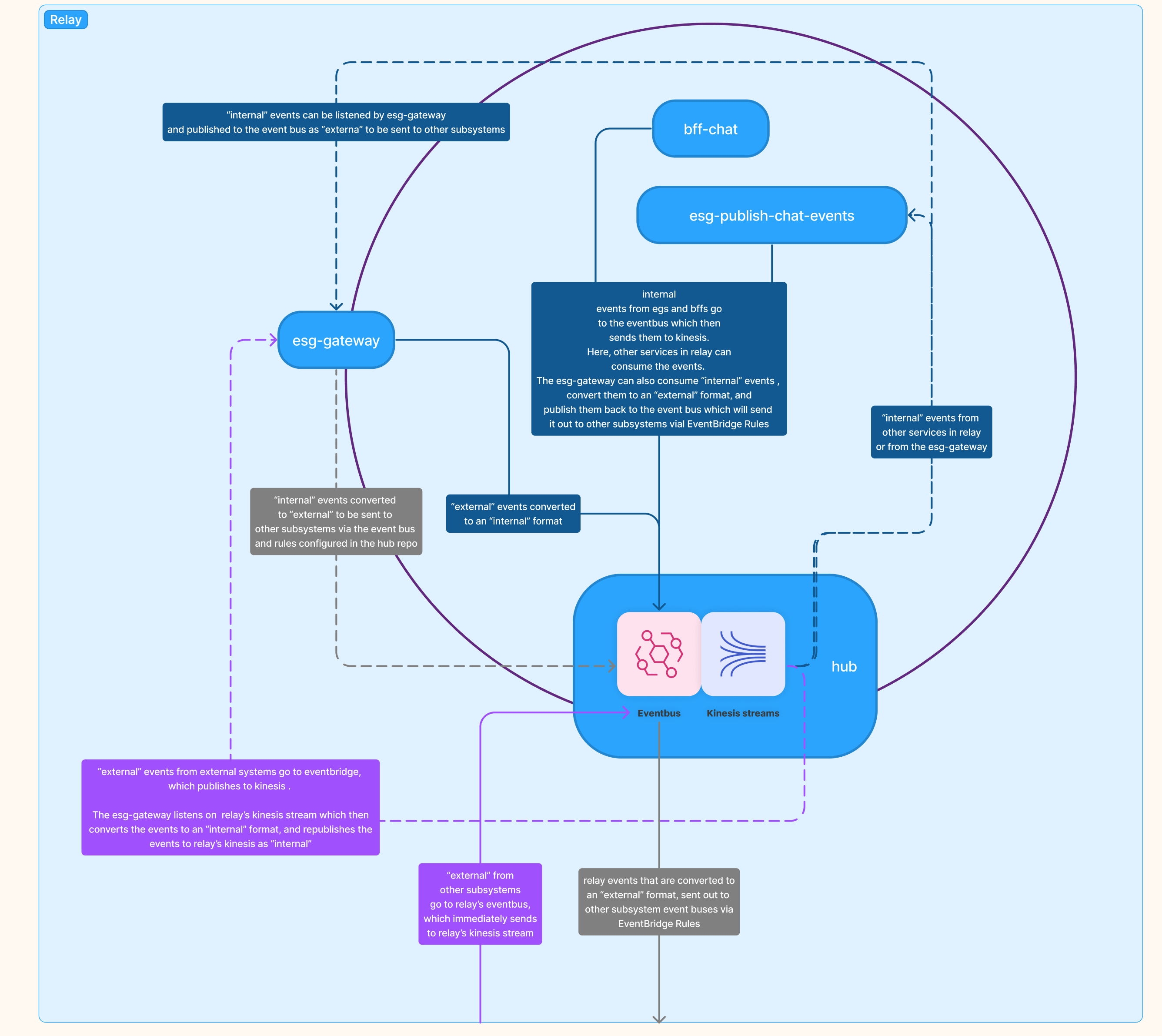

Summary of the flow of events

Here is the overall flow of all events from the previous steps.

- Blue lines:

  - Solid lines: Events in an "internal" format that are published to Relay's event bus.

  - Dotted lines: Events in an "internal" format that are used by services inside of Relay.

- Purple lines:

  - Solid lines: "External" events that come from an external subsystem's event bus and are sent to Relay's event bus via a Rule configured in the external event bus (in the hub package of the external subsystem).

  - Dotted lines: Events that are in an "external" format from external subsystems. Only the esg-gateway listens on these events to convert them to an "internal" format for Relay's services to use.

- Gray lines:

  - Dotted lines: These are Relay's "internal" domain events which have been converted to an "external" format and are sent to Relay's event bus.

  - Solid lines: These are Relay's "internal" domain events which have been converted to an "external" format and are sent to other subsystems via Rules set up in Relay's hub package.
